Traditional one-time Copilot training sessions fail to capture how employees use the tool in their daily work, leaving leaders without insight into real adoption and impact. Cognician drives Copilot adoption by embedding generative AI (GenAI) challenges into daily work, enabling ongoing practice and measurable behavior change.

We've all been through a one-and-done training session: You spend a day outside the flow of work, there's a flurry of slides and demos, and then … it's back to business as usual. The problem? For something as transformative as Microsoft Copilot adoption, that approach not only fades fast but also leaves leaders flying blind when it comes to measuring impact. Traditional training might tell you who showed up or who passed a quiz, but it won't reveal what happens when people get back to work.

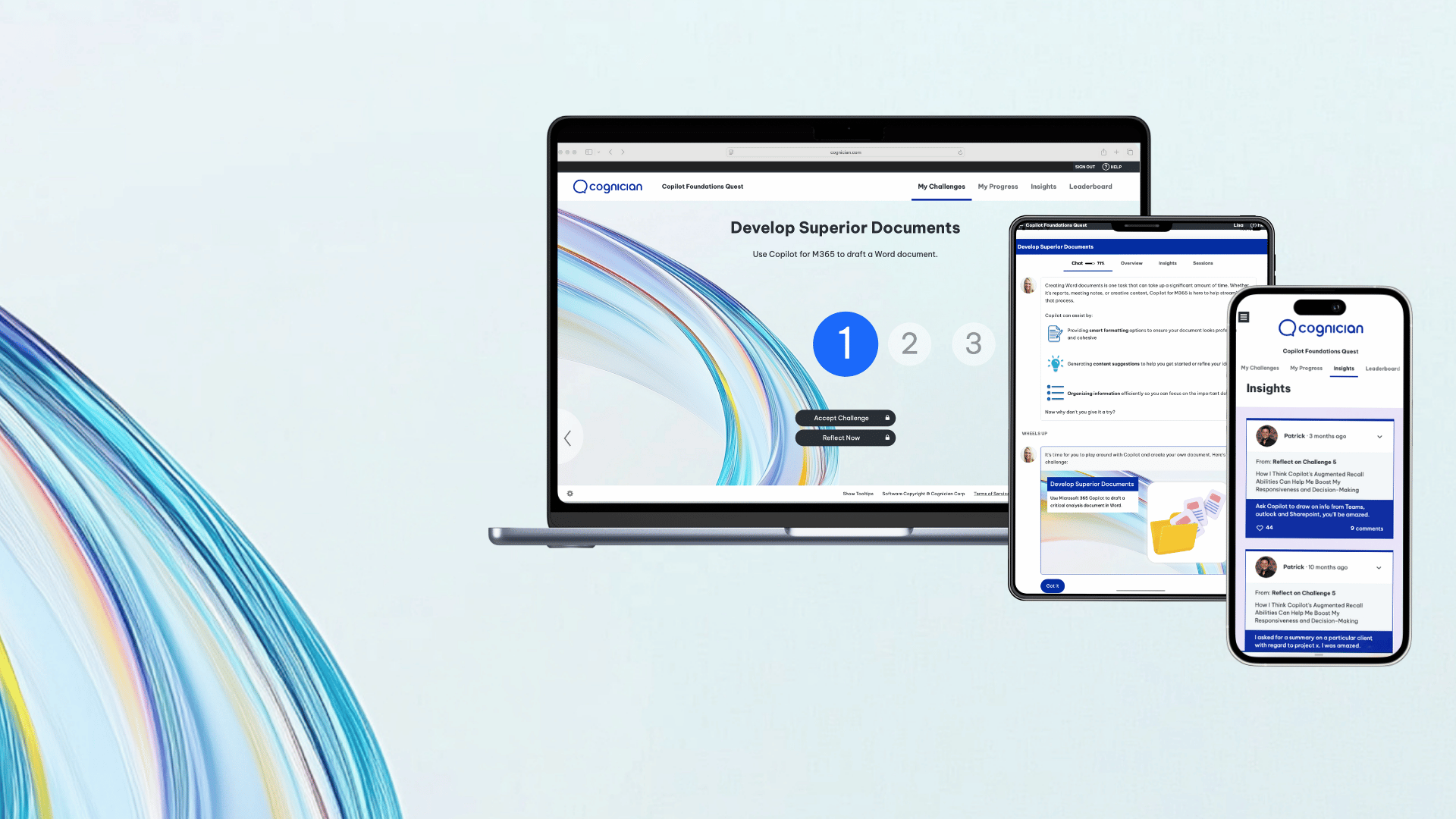

Contrast that with Cognician's approach: giving employees real, on-the-job challenges they can complete in the flow of their work. These work-based challenges with spaced practice let participants experiment with Copilot on real tasks over several weeks and build habits through daily use.

Each challenge isn't just a learning moment – it's a set of data points. When we give employees multiple opportunities to apply Copilot in real scenarios, we can capture rich data that traditional methods simply can't. For example, as participants engage in challenges, we can see how often they try Copilot, how they refine their prompts, and how their confidence grows with each attempt.

One tech client's program lead called this real-time visibility a 'game changer' because, instead of guessing, they could watch adoption unfold and coach accordingly. Participants aren't learning in a vacuum; they're reflecting and sharing. Their own words often reveal a mindset shift – "I used to be skeptical, but now I'm using Copilot daily!" – which static training often fails to create. Better yet, as people post tips and 'Aha!' moments on our Insights boards, we witness peer learning in action. Colleagues pick up each other's best practices, thereby amplifying the impact.

To put a new spin on an old business adage, whatever is measured is what gets done. By weaving Copilot practice into daily work, Cognician measures what truly matters – not just completion rates, but real-world adoption signals. Here's a breakdown of the data dimensions captured by Cognician versus traditional training:

| Data Dimension | Cognician | Traditional Training |
|---|---|---|
| Prompt quality | Reflects how participants improve their prompts over time | Not directly measurable in real work context |
| Tool usage patterns | Tracks the frequency and patterns of Copilot use in daily tasks | Little to no insight after the initial training session |
| Mindset shifts | Captures the changes in attitude and confidence through reflections, as well as assessment and survey data | Difficult to gauge beyond one-time post-training surveys |
| Reflection insights | Collects participants' written insights and 'Aha!' moments | Rarely captured (no mechanism for ongoing reflection) |
| Peer learning signals | Records peer-to-peer tips, comments, and collaboration | Informal only – not captured or quantified |

In short, traditional training checks a box, whereas Cognician lights a path that can be tracked as participants travel on it.

By engaging users in the flow of work, we generate actionable insight into how they use Copilot, how they improve with it, and how they champion it. These are the metrics that transform a novel tool into a new way of working – the kind of adoption alchemy that you simply can't achieve or measure in a one-and-done class.
